Object Detection with YOLOv3

Object detection is a task that involves identifying the presence, location, and type of one or more objects in a given dataset, and builds upon methods for object recognition, localization, and classification.

One of the notable deep learning techniques for achieving state-of-the-art results in object detection is the “You Only Look Once,” or YOLO, family of convolutional neural networks. The YOLO family is a series of end-to-end deep learning models designed for fast object detection. It was developed by Joseph Redmon et al. and first described in You Only Look Once: Unified, Real-Time Object Detection (see Publications for links and other references).

The approach uses a single deep convolutional neural network that divides the input image into a grid of cells, and each cell directly predicts bounding boxes and object classes. This produces a large number of candidate bounding boxes, which a post-processing step consolidates into the final prediction. There are three main versions of the approach: YOLOv1, YOLOv2, and YOLOv3. The first version proposed the general architecture. The second refined the design and introduced predefined anchor boxes to improve bounding box proposals. The third further refined the model architecture and training process.
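The grid-based prediction and post-processing described above can be illustrated with a minimal Python sketch. It follows the box parameterization given in the YOLOv3 paper: the box centre is a sigmoid offset inside its grid cell, the width and height scale a predefined anchor box exponentially, and class scores are independent logistic (sigmoid) outputs. For the consolidation step it uses greedy per-class non-maximum suppression (NMS), a common choice for YOLO-style detectors. The function names, the 32-pixel stride, the 64x64 anchor, and the 0.5/0.45 thresholds are hypothetical values chosen for illustration. They are not taken from any particular YOLOv3 implementation or API.

```python
import math
from dataclasses import dataclass


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


@dataclass
class Box:
    x1: float
    y1: float
    x2: float
    y2: float
    score: float
    label: int


def decode_cell(t, cell_x, cell_y, anchor_w, anchor_h, stride):
    """Decode one raw prediction (tx, ty, tw, th, to, *class_logits) made by grid
    cell (cell_x, cell_y) with one anchor box (in pixels) into a pixel-space Box.
    Hypothetical helper for illustration only."""
    tx, ty, tw, th, to, *class_logits = t
    # Centre: the sigmoid keeps the offset inside the cell; stride converts cells to pixels.
    cx = (sigmoid(tx) + cell_x) * stride
    cy = (sigmoid(ty) + cell_y) * stride
    # Width and height rescale the anchor (prior) box exponentially.
    w = anchor_w * math.exp(tw)
    h = anchor_h * math.exp(th)
    # Independent logistic class scores; keep the most likely class.
    class_probs = [sigmoid(c) for c in class_logits]
    label = max(range(len(class_probs)), key=class_probs.__getitem__)
    # Final confidence = objectness * class probability.
    score = sigmoid(to) * class_probs[label]
    return Box(cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2, score, label)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a.x2, b.x2) - max(a.x1, b.x1))
    iy = max(0.0, min(a.y2, b.y2) - max(a.y1, b.y1))
    inter = ix * iy
    union = (a.x2 - a.x1) * (a.y2 - a.y1) + (b.x2 - b.x1) * (b.y2 - b.y1) - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes, score_thresh=0.5, iou_thresh=0.45):
    """Greedy per-class NMS: visit candidates from highest to lowest score and keep
    a box only if no already-kept box of the same class overlaps it by more than
    iou_thresh. Threshold defaults are illustrative assumptions."""
    candidates = sorted((b for b in boxes if b.score >= score_thresh),
                        key=lambda b: b.score, reverse=True)
    kept = []
    for box in candidates:
        if all(box.label != k.label or iou(box, k) <= iou_thresh for k in kept):
            kept.append(box)
    return kept


if __name__ == "__main__":
    stride, anchor = 32, (64, 64)  # hypothetical stride and anchor size
    raw = [
        ((0.0, 0.0, 0.0, 0.0, 3.0, 2.0, -2.0), 3, 3),   # class 0
        ((0.1, 0.0, 0.0, 0.0, 2.0, 2.0, -2.0), 3, 3),   # near-duplicate of the first box
        ((0.0, 0.0, 0.0, 0.0, 3.0, -2.0, 2.0), 5, 5),   # class 1, in another cell
        ((0.0, 0.0, 0.0, 0.0, -3.0, 2.0, -2.0), 7, 7),  # low objectness, filtered out
    ]
    boxes = [decode_cell(t, gx, gy, *anchor, stride) for t, gx, gy in raw]
    for b in non_max_suppression(boxes):
        print(b)
```

In this toy input, the four raw predictions are reduced to two boxes. The low-objectness prediction falls below the score threshold, and the near-duplicate of the first box is suppressed because it overlaps a higher-scoring box of the same class. A real YOLOv3 model produces such predictions for several anchors at three grid scales, but the decode-then-consolidate pattern is the same.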

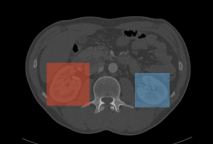

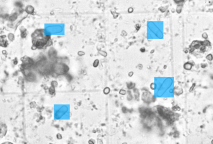

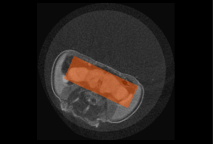

The results of applying a YOLOv3 object detection model are a series of 2D boxes around the objects of interest, as shown in the following examples.

Kidneys

Yeast cells

Drosophila brain

Publications

The following publications provide additional information about the YOLO approach and YOLOv3.

You Only Look Once: Unified, Real-Time Object Detection (https://arxiv.org/abs/1506.02640)

YOLOv3: An Incremental Improvement (https://arxiv.org/pdf/1804.02767.pdf)